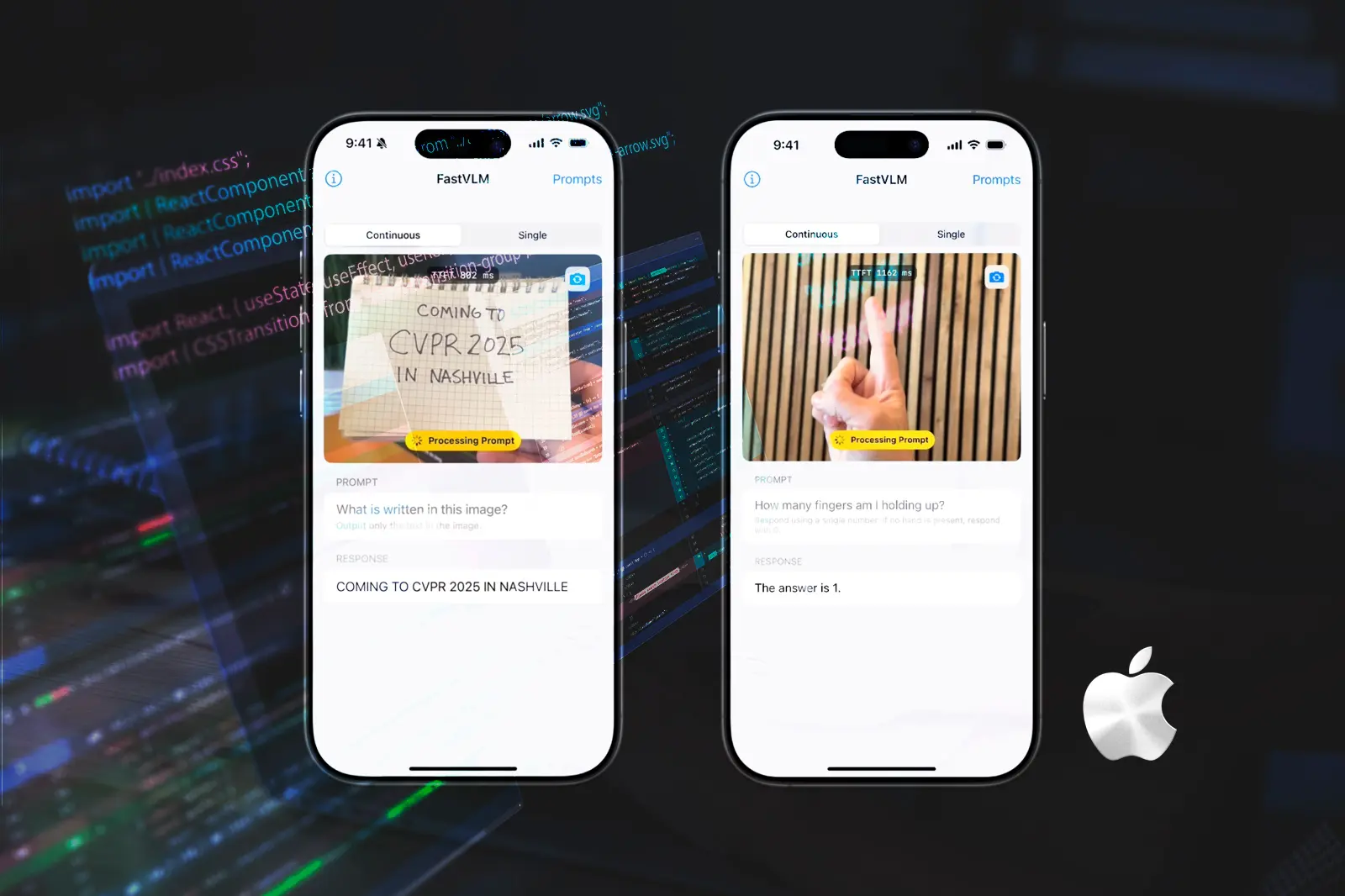

FastVLM Review: Apple’s Vision-Language Model for Real-World Business Scenarios

Regina Zaltsman

Google Ads Certified Expert

When Apple dropped FastVLM, I didn’t want to just read the benchmarks — I wanted to see how it holds up in the messy reality of business workflows. So I spent the past few weeks testing it with clients: from logistics docs to customer service cases. The results? Some parts blew me away, others reminded me that even Apple’s AI still has blind spots.

What FastVLM Actually Does

Think of it this way: a text-only LLM works purely with words. You give it text, and it gives you text back. A vision-language model like FastVLM is different: it takes an image and a text prompt together, then combines those two streams of information into one answer. So instead of just reading a paragraph about a shipping receipt, it can actually “see” the scanned document, spot the numbers, and connect them to the text description. That’s the real leap here: it doesn’t just talk about data, it interprets visuals directly alongside language.

FastVLM is Apple's open-source model that processes images and text simultaneously. The technical breakthrough lies in their FastViTHD encoder, which reduces the computational bottleneck that typically makes high-resolution image processing painfully slow.

Here's what the numbers mean in practice: Apple's smallest variant outperforms LLaVA-OneVision-0.5B with an 85x faster Time-to-First-Token (TTFT) and a 3.4x smaller vision encoder. When we tested this with a client's document processing workflow, what used to take 3-4 seconds per image now happens in under 200 milliseconds.

Real Testing

Document Analysis Success Story

Last month, we helped a mid-sized logistics company implement FastVLM for their shipping documentation process. Previously, their team manually reviewed thousands of delivery receipts, invoices, and damage reports daily.

The setup: We deployed FastVLM-1.5B on their existing Mac mini workstations (which cost about 600 EUR each). The model analyzes scanned documents, extracting key information while cross-referencing images of packages and signatures.

Results after 3 weeks:

- Processing time reduced from 2 minutes per document to 15 seconds

- Error rate dropped from 12% to 3% (humans miss details in poor-quality scans)

- Staff redirected to exception handling rather than routine data entry
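
To put the first two results in staffing terms, the arithmetic can be sketched as below. The daily volume of 2,000 documents is an assumption chosen only for illustration, and the error rate is treated as a per-document rate; the per-document times and percentages are the figures reported above.

```python
# Rough workload arithmetic for the figures above.
# DOCS_PER_DAY is an assumed volume for illustration only.
DOCS_PER_DAY = 2000


def review_hours(docs: int, seconds_per_doc: float) -> float:
    """Total review time in hours for one day's documents."""
    return docs * seconds_per_doc / 3600


def expected_errors(docs: int, error_rate: float) -> float:
    """Expected number of documents with an error (per-document rate assumed)."""
    return docs * error_rate


hours_before = review_hours(DOCS_PER_DAY, 120)  # 2 minutes per document
hours_after = review_hours(DOCS_PER_DAY, 15)    # 15 seconds per document
errors_before = expected_errors(DOCS_PER_DAY, 0.12)
errors_after = expected_errors(DOCS_PER_DAY, 0.03)

print(f"Review time: {hours_before:.1f} h -> {hours_after:.1f} h per day")
print(f"Speed-up: {hours_before / hours_after:.0f}x")
print(f"Expected errors: {errors_before:.0f} -> {errors_after:.0f} per day")
```

Under that assumed volume, the review workload falls from roughly 67 hours to roughly 8 hours per day, which is where the capacity for exception handling comes from.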

The surprise finding: FastVLM excels at reading damaged or partially obscured text in images—something we didn't expect from the technical specifications.

Where It Struggles

Not everything worked perfectly. We tested FastVLM on complex architectural drawings for a construction client, and the model struggled with technical symbols and measurement annotations. The lesson: while powerful for general document processing, specialized technical imagery still requires domain-specific training.

Business Applications We've Successfully Implemented

Customer Service Enhancement

For a telecommunications client, we built a visual troubleshooting system. Support teams can now interpret diverse user submissions: screenshots, error logs, product photos, and fragmented text descriptions.

Implementation details:

- Customers upload photos of their router/modem LED status

- FastVLM analyzes the visual indicators alongside the text description

- The system provides specific troubleshooting steps or escalates to the appropriate specialist

- Average resolution time decreased by 40%
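
The flow above can be sketched as a small routing function. This is a minimal illustration, not the client's system: the `analyze` callable stands in for whatever wraps the FastVLM call, and the labels, playbook entries, and confidence threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ticket:
    image_path: str
    description: str


@dataclass
class Triage:
    action: str  # "self_service" or "escalate"
    detail: str


# Hypothetical mapping from a model-assigned label to a troubleshooting step.
PLAYBOOK = {
    "power_off": "Check the power adapter and the wall outlet.",
    "internet_red": "Restart the modem and wait two minutes.",
}


def triage(
    ticket: Ticket,
    analyze: Callable[[str, str], tuple[str, float]],
    min_confidence: float = 0.8,
) -> Triage:
    """Route a ticket using a (label, confidence) pair from the model wrapper."""
    label, confidence = analyze(ticket.image_path, ticket.description)
    if confidence >= min_confidence and label in PLAYBOOK:
        return Triage("self_service", PLAYBOOK[label])
    # Unknown label or low confidence: hand over to a human specialist.
    return Triage(
        "escalate",
        f"Needs specialist review (label={label!r}, confidence={confidence:.2f})",
    )
```

Passing the model call in as a parameter keeps the routing logic testable without a model loaded, and makes the escalation rule explicit rather than buried in a prompt.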

Financial Document Processing

A regional bank implemented FastVLM for loan application processing. The system processes loan applications containing scanned PDFs, bank statements with charts, and hand-filled forms, ensuring all compliance checkboxes are met before approval.

Technical approach:

- FastVLM-7B model running on Mac mini workstations

- Custom training on anonymized banking documents

- Integration with existing loan management software via API

- Compliance team focuses on exceptions rather than routine reviews
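
The "exceptions rather than routine reviews" split can be illustrated with a simple completeness check on the fields the model extracts. The field names below are hypothetical placeholders, not the bank's actual checklist, and a passing application is only queued for review, never approved automatically.

```python
# Hypothetical checklist items; a real list would come from the compliance team.
REQUIRED_FIELDS = (
    "applicant_name",
    "id_document",
    "income_statement",
    "signature_present",
)


def missing_items(extracted: dict) -> list[str]:
    """Return required items that are absent, empty, or explicitly False."""
    return [field for field in REQUIRED_FIELDS if not extracted.get(field)]


def route_application(extracted: dict) -> tuple[str, list[str]]:
    """Send complete applications onward and incomplete ones to the exception queue."""
    gaps = missing_items(extracted)
    if gaps:
        return "exception", gaps
    return "ready_for_review", []
```

For example, an application whose extracted data lacks a signature would come back as `("exception", ["signature_present"])`, so the compliance team sees exactly which item needs attention.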

The Privacy Advantage: On-Device Processing

And here’s where things get really interesting: FastVLM doesn’t have to live in some distant cloud service. We can actually deploy it on a company’s own server — or even directly inside a customer-facing app on their device. In practice, that means no waiting on third-party APIs, no unpredictable bills per request, and no sensitive data flying around the internet.

From my perspective working with clients, this changes the game. Imagine a banking app that can instantly read and validate scanned documents right on your phone. Or a logistics company running FastVLM on their internal Mac servers, processing thousands of receipts without ever sending private data outside the building. We’re talking about AI that isn’t just powerful, but portable — something businesses can fully own, integrate into their existing workflows, and control from start to finish.

Real Compliance Benefits

Working with healthcare clients, we've seen how moving inference to the device reduces legal exposure, simplifies compliance, and reclaims architectural control. When patient data never leaves the local device, HIPAA compliance becomes significantly more straightforward.

Practical example: A medical practice now uses FastVLM to analyze patient-submitted photos (skin conditions, injury documentation) alongside symptom descriptions. The entire process happens locally on iPads, with no cloud transmission required.

How We See Implementation

When new AI models come out, most companies ask: “But how do we actually use this in our day-to-day business?” That’s exactly the question we help our clients answer. With Apple’s FastVLM, the opportunities aren’t abstract — they’re concrete problems we can solve right now.

Imagine a real estate agency where agents spend hours manually checking scanned contracts and ID documents. With FastVLM running locally, those documents can be read, validated, and filed automatically, while the agent focuses on closing deals.

Or take a healthcare clinic that receives patient photos through their app. Instead of uploading them to some cloud service (and worrying about compliance nightmares), FastVLM can analyze those images directly on the iPad. The doctor gets structured insights instantly, without any sensitive data leaving the device.

Even customer service teams can benefit. Think of telecom companies drowning in blurry router photos and half-written support tickets. With this model built right into their own helpdesk system, the AI can “see” the photo, read the notes, and suggest the next steps — cutting resolution time dramatically, without the hidden API costs that come with OpenAI or other cloud-heavy providers.

And this is the key point: by moving away from per-request APIs and tapping into Apple’s optimization, businesses finally get predictable costs and independence. No more paying by the token, no more surprise bills for high-volume tasks. Just AI that runs on your existing devices and infrastructure, fully under your control.

At Zaltsman Media, we look at FastVLM not as another shiny tech toy, but as a way to help companies own their AI. To integrate it into real workflows, cut costs where cloud models would eat budgets, and give teams tools that work quietly in the background — where they make the most impact.

Technical Insights: How FastViTHD Changes the Game

FastViTHD is a hybrid convolutional-transformer architecture comprising a convolutional stem, three convolutional stages, and two subsequent stages of transformer blocks. This hybrid approach explains why it performs so well on business documents—the convolutional layers handle layout and structure, while transformer blocks manage semantic understanding.
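
One way to see why the encoder design matters for latency is to count the visual tokens handed to the language model, since Time-to-First-Token grows with that count. The sketch below assumes each token covers one square patch of the input. The resolutions and stride values (14 for a ViT-L/14-style encoder, 64 for a more aggressively downsampling hybrid) are illustrative assumptions, not figures taken from our testing.

```python
def visual_tokens(image_side: int, stride: int) -> int:
    """Number of tokens when each token covers a stride x stride patch."""
    per_side = image_side // stride
    return per_side * per_side


# Assumed configurations, for illustration only.
vit_tokens = visual_tokens(336, 14)      # 24 x 24 grid
hybrid_tokens = visual_tokens(1024, 64)  # 16 x 16 grid

print(f"ViT-style encoder at 336 px: {vit_tokens} tokens")
print(f"Hybrid encoder at 1024 px:   {hybrid_tokens} tokens")
```

Under these assumptions the hybrid encoder takes in about nine times more pixels yet emits fewer than half as many tokens, which is the kind of trade-off that keeps high-resolution document scans affordable.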

What this means for implementation:

- Better performance on structured documents (invoices, forms, reports)

- Efficient handling of mixed content (charts within text documents)

- Scalable across different image resolutions without performance degradation

Integration with Business Systems

API and Workflow Integration

We've developed custom connectors for popular business systems:

CRM Integration: Automatically process customer-submitted images and append analysis to support tickets

ERP Connection: Link visual quality control data with inventory management systems

Document Management: Enhance existing document workflows with automated categorization and data extraction

Security and Compliance Framework

In Europe, the conversation around AI isn’t just about innovation — it’s about compliance. With GDPR setting strict rules on how personal data is handled (and fines that can reach millions of euros), keeping information local is not a “nice to have,” it’s a survival strategy.

This is where Apple’s on-device approach really shines. By processing documents, photos, and text directly on your own servers or devices, companies drastically reduce the risk of data leakage and third-party exposure.

Our recommended approach for EU businesses:

- Local-first AI: Sensitive data never leaves your infrastructure or the customer’s device.

- Clear audit trails: All processing stays under your control, making regulatory checks easier.

- Vendor independence: No need for additional agreements with external AI providers or uncertainty about where data is stored.
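
As a sketch of what a local audit trail might look like, the snippet below writes one JSON line per processed document. It records a SHA-256 digest instead of the document content, so the log itself does not hold the scanned data. The record fields and the function names are assumptions for illustration, not a compliance standard.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(document_bytes: bytes, model_name: str, outcome: str) -> str:
    """Build one JSON audit line that identifies a document by hash only."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "sha256": hashlib.sha256(document_bytes).hexdigest(),
        "model": model_name,
        "outcome": outcome,
    }
    return json.dumps(record, sort_keys=True)


def append_audit(log_path: str, line: str) -> None:
    """Append a record to a local, append-only log file."""
    with open(log_path, "a", encoding="utf-8") as handle:
        handle.write(line + "\n")
```

Whether a digest alone satisfies a particular regulator is a question for the company's data protection officer; the point here is only that the log can stay on the same infrastructure as the processing.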

For healthcare clinics, financial institutions, and even SMEs handling customer data, this isn’t just about privacy — it’s about peace of mind. AI can become a tool for efficiency without creating compliance headaches.

Looking Forward: Strategic Implications

Market Positioning

The global multimodal AI market size was valued at USD 1.6 billion in 2024 and is estimated to grow at a CAGR of 32.7% from 2025 to 2034. Early adopters are positioning themselves advantageously in this expanding market.
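
To make the growth figure concrete, the projection can be compounded directly. The sketch assumes the 2024 valuation is the base and that the 32.7% rate applies for each of the ten years from 2025 through 2034; a different base year or period count would change the result.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return value * (1 + cagr) ** years


BASE_2024_USD_BN = 1.6  # valuation quoted above
CAGR = 0.327            # growth rate quoted above
YEARS = 10              # assumed: 2025 through 2034 inclusive

size_2034 = project(BASE_2024_USD_BN, CAGR, YEARS)
print(f"Implied 2034 market size: about USD {size_2034:.1f} billion")
```

On those assumptions the quoted rate implies a market of roughly USD 27 billion by 2034.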

Competitive Landscape

While competitors focus on cloud-scale models, Apple's approach enables smaller businesses to deploy sophisticated AI without massive infrastructure investments. We're seeing companies with 50-500 employees successfully implementing vision AI capabilities that were previously accessible only to tech giants.

Challenges and Honest Limitations

What We've Encountered in Real Deployments

Model size constraints: The 0.5B model works well for simple tasks but struggles with complex reasoning. We typically recommend the 7B model for business-critical applications.

Training data requirements: Custom implementations need domain-specific examples. Plan for 2-4 weeks of data preparation and model fine-tuning.

Integration complexity: While the model itself is straightforward, connecting it to existing business workflows requires careful planning and often custom development.

How Zaltsman Media Can Help

Based on our hands-on experience with FastVLM implementations, we offer:

Technical consultation: Assessment of your specific use cases and ROI potential

Custom development: Building FastVLM applications tailored to your business workflows

Training and support: Helping your team understand and maintain vision AI systems

Getting Started

The practical path forward depends on your current technical capabilities and business priorities. We typically recommend a structured approach: start with pilot testing, measure results carefully, then scale successful implementations.

Download Apple's FastVLM demo and test it with 10-20 examples of your actual business documents or images. This hour of testing will tell you more about practical applicability than any theoretical analysis.
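
For the "measure results carefully" step, a pilot on 10-20 documents can be scored with a few lines of code. This is a minimal sketch that assumes you have typed up the correct field values for each test document by hand; the field names in the usage example are hypothetical, and matching is exact after trimming whitespace and ignoring case.

```python
def normalize(value) -> str:
    """Trim and lowercase a value; a missing value becomes an empty string."""
    return "" if value is None else str(value).strip().lower()


def field_accuracy(predictions: list[dict], expected: list[dict]) -> float:
    """Fraction of expected fields that the model extracted correctly."""
    total = 0
    correct = 0
    for predicted, truth in zip(predictions, expected):
        for field, value in truth.items():
            total += 1
            if normalize(predicted.get(field)) == normalize(value):
                correct += 1
    return correct / total if total else 0.0


# Usage with two hand-labelled documents (hypothetical field names).
expected = [
    {"invoice_no": "inv-001", "total": "120.50"},
    {"invoice_no": "INV-002", "total": "99.00"},
]
predictions = [
    {"invoice_no": "INV-001 ", "total": "120.50"},
    {"invoice_no": "INV-002"},  # model missed the total
]
score = field_accuracy(predictions, expected)
print(f"Field accuracy: {score:.0%}")
```

Tracking this one number per document type before and after any prompt or model change gives the pilot a concrete pass/fail criterion.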

FastVLM represents a significant step toward practical, privacy-preserving AI that businesses can actually deploy and control. The technology is ready, the costs are manageable, and the competitive advantages are real.

The question isn't whether vision AI will transform business operations—it's whether you'll lead that transformation or react to it.

Regina Zaltsman

Google Ads Certified Expert with over 18 years of experience